React Three Fiber can be used to render a VR experience in the web browser. But how do we sync animation and audio?

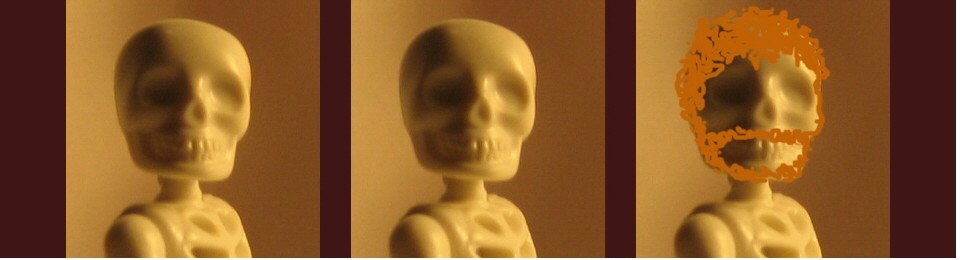

We can create all our animation in Blender. Here’s a Blender scene I’ve been working on recently:

As you can see, we have two characters. They both have bones, providing skeletal animation to move their bodies. They also have shape keys, providing morph target animation to move their mouths.

We can export each character separately from Blender, so that each one can be imported into React Three Fiber as its own component.

First problem in syncing animation with audio: how can we get a character’s speech to come from their mouth while the skeletal animation moves them around the scene? A solution is to move the positional audio to the world position of the character’s neck bone on each frame:

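```javascript
import * as THREE from 'three'
import { useFrame } from '@react-three/fiber'

// scratch vector, reused every frame to avoid allocations
const bonePosition = new THREE.Vector3()

// inside the character component:
useFrame(() => {
  // update positional audio (to neck bone position)
  nodes.Layer_1001.skeleton.bones[boneIndex].getWorldPosition(bonePosition)
  // position is relative to the audio's parent, so this assumes that parent sits untransformed at the world origin
  positionalAudio.current.position.copy(bonePosition)
})
```

Now wherever that character moves in the scene, the speech sticks with it!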
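Why `getWorldPosition` rather than the bone’s own `position`? A bone’s `position` is local to its parent bone, so where it ends up in the scene depends on the whole chain of parents above it. The framework-free sketch below illustrates this with hypothetical `makeNode` and `worldPosition` helpers for a translation-only hierarchy. It is a simplification: three.js’s real `Object3D.getWorldPosition` works with full matrices, so it also accounts for rotation and scale.

```javascript
// Hypothetical, simplified scene-graph node: translation only (no rotation or scale)
function makeNode(name, x, y, z, parent = null) {
  return { name, position: { x, y, z }, parent }
}

// Sum the local offsets up the parent chain to get the world position
function worldPosition(node) {
  const out = { x: 0, y: 0, z: 0 }
  for (let n = node; n !== null; n = n.parent) {
    out.x += n.position.x
    out.y += n.position.y
    out.z += n.position.z
  }
  return out
}

// A character moved across the scene, with made-up hips and neck bones below it
const character = makeNode('character', 2, 0, -3)
const hips = makeNode('hips', 0, 1, 0, character)
const neck = makeNode('neck', 0, 0.6, 0, hips)

console.log(neck.position)       // { x: 0, y: 0.6, z: 0 }
console.log(worldPosition(neck)) // { x: 2, y: 1.6, z: -3 }
```

The neck’s local position never changes as the character travels, which is why the positional audio has to follow the neck’s world position instead.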

Second problem in syncing animation with audio: how can we get a character’s speech to move their mouth with morph target animation? A solution is to take the average of an audio analyser’s frequency data (roughly, how loud the speech is) and check on each frame whether it is above a threshold:

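```javascript
import { useRef, useEffect } from 'react'
import { useFrame } from '@react-three/fiber'
import * as THREE from 'three'

// inside the character component:
const analyser = useRef()

// create the analyser once the positional audio is mounted (an fftSize of 32 gives 16 frequency bins)
useEffect(() => void (analyser.current = new THREE.AudioAnalyser(positionalAudio.current, 32)), [])

useFrame((state, delta) => {
  // use morph animation (if audio detected)
  if (analyser.current) {
    // average of the analyser's byte frequency data, on a 0 to 255 scale
    const data = analyser.current.getAverageFrequency()
    if (data > 25.0) {
      // audio detected: open the mouth via its shape key (morph target influence)
    }
  }
})
```

Now whenever a character speaks, their mouth moves!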
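The threshold test itself can be sketched without three.js. `AudioAnalyser.getAverageFrequency()` averages the analyser’s byte frequency data (one value from 0 to 255 per bin, `fftSize / 2` bins), so an `fftSize` of 32 gives 16 bins. The sketch below reproduces that average on a plain array and maps it to a mouth influence. The `averageFrequency` and `mouthInfluence` helpers, the `smoothing` parameter, and the sample bin values are hypothetical; the smoothing, which eases the mouth open and shut instead of snapping, is an optional addition that is not part of the original code.

```javascript
// Mean of byte frequency data, the same calculation getAverageFrequency performs
function averageFrequency(bins) {
  let sum = 0
  for (const value of bins) sum += value
  return sum / bins.length
}

// Hypothetical helper: target influence is 1 (mouth open) above the threshold, else 0.
// smoothing in [0, 1) eases the current influence toward the target each frame;
// smoothing = 0 snaps straight to the target.
function mouthInfluence(current, average, threshold = 25.0, smoothing = 0) {
  const target = average > threshold ? 1 : 0
  return current + (target - current) * (1 - smoothing)
}

// 16 made-up bins, as an fftSize of 32 would give
const speaking = new Uint8Array([120, 100, 80, 60, 40, 30, 20, 10, 0, 0, 0, 0, 0, 0, 0, 0])
const silence = new Uint8Array(16)

console.log(averageFrequency(speaking))                      // 28.75
console.log(mouthInfluence(0, averageFrequency(speaking)))   // 1
console.log(mouthInfluence(1, averageFrequency(silence)))    // 0
console.log(mouthInfluence(0, 28.75, 25, 0.5))               // 0.5
```

In a `useFrame` callback, the returned value would be written to the mouth mesh’s `morphTargetInfluences` entry for the mouth shape key; which mesh and index that is depends on the exported model. Note that this smoothing is applied per frame, so its speed varies with frame rate.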

Here’s a video of the characters floating about in React Three Fiber, chatting away:

Animation and audio sync is incorporated into my Saltwash VR stereoscopic videos.