Arkwood adores his retro computer games. His all-time favourite is Commando for the Commodore 64. ‘I love killing all those enemy soldiers, and the theme tune by Rob Hubbard is so brill I sometimes piss myself with excitement.’ You see, he really is smitten. Here is a photo of Arkwood in action:

Arkwood plays his old Commodore 64 games on a Windows 7 laptop, using the VICE emulator.

But there is one small problem – Arkwood cannot multitask. ‘I never remember to collect those extra grenades,’ he wailed. He’s talking about the following supplies:

‘Not to worry,’ I told him, ‘I will create a co-pilot for you. A robot that will inform you every time the grenade supplies appear on the screen.’

Our robot will consist of the following:

- Webcam for eyes – whilst Arkwood is playing Commando, the webcam will take photos of the laptop screen.

- Raspberry Pi for brain – the tiny Pi computer will receive frequent snaps of Arkwood’s gameplay from the webcam, attempting to detect grenade supplies.

- Speakers for mouth – if the Python code running on the Raspberry Pi manages to detect grenade supplies in a webcam photo, Arkwood will be alerted via a set of speakers attached to the Pi.

We will use OpenCV Haar cascade classifiers to detect the grenade supplies in each webcam photo. My previous post, Guitar detection using OpenCV, explains how to get started with creating classifiers. To collect 95 positive images of the grenade supplies, I put the computer game into cheat mode and took snaps of the laptop screen as I blasted my way through each level and the end-of-game bloodbath. Here are the settings I used to train my grenade classifier for 12 stages:

```
perl createtrainsamples.pl positives.dat negatives.dat samples 500 "./opencv_createsamples -bgcolor 0 -bgthresh 0 -maxxangle 1.1 -maxyangle 1.1 -maxzangle 0.5 -maxidev 40 -w 40 -h 33"

opencv_haartraining -data haarcascade_grenade -vec samples.vec -bg negatives.dat -nstages 20 -nsplits 2 -minhitrate 0.999 -maxfalsealarm 0.5 -npos 500 -nneg 200 -w 40 -h 33 -nonsym -mem 2048 -mode ALL
```
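The `positives.dat` and `negatives.dat` files passed to these commands are plain text lists of image paths, one per line. Here is a minimal sketch for generating them. It assumes the positive snaps and the background images each sit in their own folder. The `write_image_list` helper and the folder names are hypothetical.

```python
import os


def write_image_list(folder, list_path, extensions=(".jpg", ".jpeg", ".png", ".bmp")):
    """Write one image path per line (hypothetical helper for positives.dat / negatives.dat)."""
    # keep only files that look like images, sorted so the list is stable between runs
    paths = sorted(
        os.path.join(folder, name)
        for name in os.listdir(folder)
        if name.lower().endswith(extensions)
    )
    with open(list_path, "w") as list_file:
        for path in paths:
            list_file.write(path + "\n")
    # return how many images were listed, as a quick sanity check
    return len(paths)
```

For example, `write_image_list("positives", "positives.dat")` and `write_image_list("negatives", "negatives.dat")` would produce the two list files, assuming folders with those names exist next to the training scripts.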

Now, in order to alert Arkwood that grenade supplies have been found we use Google’s Text To Speech service to ‘talk’ to him through the speakers.

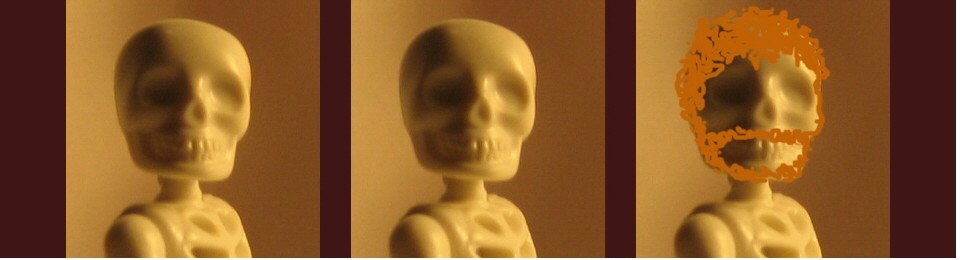

Great. Let’s give it a go! Arkwood starts playing a game of Commando on his laptop whilst his co-pilot robot fixes its webcam eyes on the screen, busy taking photos of his gameplay…

Hurray! The grenade supplies have been detected and our robot tells Arkwood to go get them. Now my buddy will never again forget to pick up his extra bombs.

‘There’s only one problem,’ he informs me, ‘I can’t play the game knowing someone is watching me. I get nervous. It’s like trying to pee at a public urinal when there is someone standing next to you.’

I was furious. ‘For fuck’s sake!’ I screamed, ‘Can’t you just pretend you’re listening to Rob Hubbard’s superb musical score? You’ll piss just fine.’

Ciao!

P.S.

Arkwood asked me to pass on the following Commodore 64 resources:

http://www.lemon64.com/

http://c64g.com/

And if you want to see Commando in all its graphical and musical glory, played by an expert, watch this YouTube video.

P.P.S.

Here’s all the Python code. First the main program:

```python
from game import Webcam, Detection
from speech import Speech

webcam = Webcam()
detection = Detection()
speech = Speech()

# play a game of Commando
while True:

    # attempt to detect grenade supplies
    image = webcam.read_image()
    if image is None:
        continue  # webcam read failed, so try the next frame
    item_detected = detection.is_item_detected_in_image('haarcascade_grenade.xml', image)

    # alert Arkwood of grenades
    if item_detected:
        speech.text_to_speech("Get the grenade supplies")
```

We simply loop, obtaining images from the webcam and attempting to detect grenade supplies. If grenades are detected then we alert Arkwood via some speakers.
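To try the control flow of the main program without a webcam or speakers attached, the same loop can be pulled into a function that takes the three collaborators as arguments. This is a sketch only. The `run_co_pilot` name, the `max_frames` limit and the stub classes are hypothetical, added so the loop can stop and be exercised on a desktop.

```python
def run_co_pilot(webcam, detection, speech, cascade_path="haarcascade_grenade.xml", max_frames=None):
    """Read frames, look for grenade supplies, and speak an alert for each hit.

    max_frames is a hypothetical limit so the loop can end; None loops forever,
    as the original program does. Returns the number of alerts spoken.
    """
    frames = 0
    alerts = 0
    while max_frames is None or frames < max_frames:
        frames += 1
        image = webcam.read_image()
        if image is None:
            continue  # webcam read failed; try the next frame
        if detection.is_item_detected_in_image(cascade_path, image):
            speech.text_to_speech("Get the grenade supplies")
            alerts += 1
    return alerts


# Hypothetical stand-ins for the real Webcam, Detection and Speech classes
class FakeWebcam(object):
    def __init__(self, frames):
        self.frames = list(frames)

    def read_image(self):
        # hand out queued frames, then behave like a failed read
        return self.frames.pop(0) if self.frames else None


class FakeDetection(object):
    def is_item_detected_in_image(self, cascade_path, image):
        return image == "grenades"


class FakeSpeech(object):
    def __init__(self):
        self.spoken = []

    def text_to_speech(self, text):
        self.spoken.append(text)
```

Feeding it the frames `["empty", "grenades", None, "grenades"]` with `max_frames=4` should produce two spoken alerts, with the failed read simply skipped.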

Next up, the Webcam and Detection classes used by the main program:

```python
import cv2
from datetime import datetime


class Webcam(object):

    # read image from webcam
    def read_image(self):
        capture = cv2.VideoCapture(0)
        success, image = capture.read()
        # release the device so it is free for the next read
        capture.release()
        return image if success else None


class Detection(object):

    # is item detected in image
    def is_item_detected_in_image(self, item_cascade_path, image):

        # do detection
        item_cascade = cv2.CascadeClassifier(item_cascade_path)
        # OpenCV webcam frames are stored in BGR channel order
        gray_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        items = item_cascade.detectMultiScale(gray_image, scaleFactor=1.1, minNeighbors=40)

        for (x, y, w, h) in items:
            cv2.rectangle(image, (x, y), (x + w, y + h), (255, 0, 0), 2)

        # save image to disk
        self._save_image(image)

        # indicate whether item detected in image
        return len(items) > 0

    # save image to disk (cv2.imwrite does not create the WebCam/Detection folder)
    def _save_image(self, img):
        filename = datetime.now().strftime('%Y%m%d_%Hh%Mm%Ss%f') + '.jpg'
        cv2.imwrite("WebCam/Detection/" + filename, img)
```

The Webcam class has one method which reads an image from the webcam.

The Detection class has an is_item_detected_in_image method which loads our classifier file and uses it to attempt to detect grenade supplies in the webcam image. We draw a rectangle on the image around any grenade supplies detected. We also have a private _save_image method which saves each webcam image to disk for later inspection.
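The `scaleFactor=1.1` argument to `detectMultiScale` controls how many sizes are searched. Conceptually, the 40×33 training window (the `-w 40 -h 33` used above) grows by 10% per step until it no longer fits the frame. The sketch below counts those steps, assuming a 640×480 webcam frame; the frame size and the `count_scales` helper are assumptions. OpenCV's own implementation shrinks the image rather than growing the window, so its exact count may differ slightly.

```python
def count_scales(frame_w, frame_h, win_w, win_h, scale_factor):
    """Count the window sizes tried when the detection window grows by scale_factor per step."""
    scales = 0
    factor = 1.0
    # keep growing while the scaled window still fits inside the frame
    while round(win_w * factor) <= frame_w and round(win_h * factor) <= frame_h:
        scales += 1
        factor *= scale_factor
    return scales


print(count_scales(640, 480, 40, 33, 1.1))  # 29
print(count_scales(640, 480, 40, 33, 1.3))  # 11
```

A larger scaleFactor means fewer sizes and less work per frame, at the risk of stepping over the size at which the supplies would have matched. The fairly high minNeighbors=40 pulls the other way: many overlapping hits are needed before a detection counts, which suppresses false alarms.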

Finally, our Speech class, which makes use of Google’s Text To Speech service:

```python
import os
from subprocess import call

try:
    from urllib import quote_plus  # Python 2
except ImportError:
    from urllib.parse import quote_plus  # Python 3


class Speech(object):

    # converts text to speech
    def text_to_speech(self, text):
        try:
            # truncate text as google only allows 100 chars
            text = text[:100]

            # encode the text
            query = quote_plus(text)

            # build endpoint
            endpoint = "http://translate.google.com/translate_tts?tl=en&q=" + query

            # debug
            print(endpoint)

            # get google to translate and mplayer to play;
            # discard mplayer's output (stdout=PIPE with call() can deadlock)
            with open(os.devnull, 'w') as devnull:
                call(["mplayer", endpoint], shell=False, stdout=devnull, stderr=devnull)

        except Exception:
            print("Error translating text")
```

Google will return an audio file representing the text we sent it, and we can then use MPlayer to ‘talk’ to Arkwood through the speakers.
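The Speech class simply cuts off anything past 100 characters. If a longer warning were ever needed, one option is to split it at word boundaries and request each piece in turn. Here is a minimal sketch using the standard library's `textwrap`. The `split_for_tts` helper is hypothetical, and the 100-character limit is taken from the comment in the Speech code.

```python
import textwrap


def split_for_tts(text, limit=100):
    """Split text into chunks of at most `limit` characters, breaking on spaces where possible."""
    # break_long_words=True still splits a single word longer than the limit
    return textwrap.wrap(text, width=limit, break_long_words=True)
```

Each chunk could then be passed to `speech.text_to_speech` in a loop, so nothing gets lost to truncation.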
