Tags

face detection, facial recognition, GitHub, Google, Haar Cascades, Intel, Itseez, motion detection, OpenCV, OpenCV Python Tutorials, Python, Raspberry Pi, Speech To Text, Text To Speech

Arkwood was delighted with my endeavours to accost the postman at his front door – at least, that is what I thought. So it came as a surprise when he told me this morning, ‘Fuckin’ piece of shite, your code. A car caused it to trigger.’ Granted, I hadn’t accounted for an automobile engaging with the system, so I set about adding face detection to the Python program so that it would only strike up a conversation with humans.

The OpenCV Python Tutorials provided the code, which I wrapped into my Webcam class:

import cv2
from datetime import datetime

class Webcam(object):

    WINDOW_NAME = "Arkwood's Surveillance System"

    # constructor
    def __init__(self):
        self.webcam = cv2.VideoCapture(0)

    # save image to disk
    def _save_image(self, path, image):
        filename = datetime.now().strftime('%Y%m%d_%Hh%Mm%Ss%f') + '.jpg'
        cv2.imwrite(path + filename, image)

    # obtain changes between images
    def _delta(self, t0, t1, t2):
        d1 = cv2.absdiff(t2, t1)
        d2 = cv2.absdiff(t1, t0)
        return cv2.bitwise_and(d1, d2)

    # detect faces in webcam
    def detect_faces(self):

        # get image from webcam (OpenCV delivers frames in BGR channel order)
        img = self.webcam.read()[1]

        # do face/eye detection
        face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
        eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = face_cascade.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)
            roi_gray = gray[y:y + h, x:x + w]
            roi_color = img[y:y + h, x:x + w]
            eyes = eye_cascade.detectMultiScale(roi_gray)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex + ew, ey + eh), (0, 255, 0), 2)

        # save image to disk
        self._save_image('WebCam/Detection/', img)

        # show image in window
        cv2.imshow(self.WINDOW_NAME, img)
        cv2.waitKey(1000)

        # tidy and quit
        cv2.destroyAllWindows()

        if len(faces) == 0:
            return False

        return True

    # wait until motion is detected
    def detect_motion(self):

        # set motion threshold
        threshold = 170000

        # hold three greyscale images at any one time
        t_minus = cv2.cvtColor(self.webcam.read()[1], cv2.COLOR_BGR2GRAY)
        t = cv2.cvtColor(self.webcam.read()[1], cv2.COLOR_BGR2GRAY)
        t_plus = cv2.cvtColor(self.webcam.read()[1], cv2.COLOR_BGR2GRAY)

        # now let's loop until we detect some motion
        while True:

            # obtain the changes between our three images
            delta = self._delta(t_minus, t, t_plus)

            # display changes in surveillance window
            cv2.imshow(self.WINDOW_NAME, delta)
            cv2.waitKey(10)

            # obtain non-zero pixel count, i.e. where motion was detected
            count = cv2.countNonZero(delta)

            # debug
            # print(count)

            # if the threshold has been breached, save some snaps to disk
            # and get the hell out of the function...
            if count > threshold:
                self._save_image('WebCam/Motion/', delta)
                self._save_image('WebCam/Photograph/', self.webcam.read()[1])
                cv2.destroyAllWindows()
                return True

            # ...otherwise, let's handle a new snap
            t_minus = t
            t = t_plus
            t_plus = cv2.cvtColor(self.webcam.read()[1], cv2.COLOR_BGR2GRAY)
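To make the `_delta` step concrete, here is a minimal pure-Python sketch (Python 3, no OpenCV needed) of what `cv2.absdiff`, `cv2.bitwise_and` and `cv2.countNonZero` compute per pixel, using small 2-D lists as stand-in greyscale frames. The function names are hypothetical. A pixel only survives if it changed across both pairs of frames, and because the AND is bitwise, two non-zero differences such as 2 and 4 still combine to 0.

```python
def three_frame_delta(t0, t1, t2):
    """Pure-Python mirror of Webcam._delta for 2-D lists of 0-255 ints:
    bitwise_and(absdiff(t2, t1), absdiff(t1, t0)), pixel by pixel."""
    return [
        [abs(c - b) & abs(b - a) for a, b, c in zip(r0, r1, r2)]
        for r0, r1, r2 in zip(t0, t1, t2)
    ]


def count_nonzero(image):
    """Equivalent of cv2.countNonZero for a 2-D list."""
    return sum(1 for row in image for px in row if px != 0)


def motion_detected(t0, t1, t2, threshold):
    """True when more than `threshold` pixels changed across both frame pairs."""
    return count_nonzero(three_frame_delta(t0, t1, t2)) > threshold


# Tiny example: only the top-left pixel flickers 0 -> 255 -> 0.
t0 = [[0, 0], [50, 50]]
t1 = [[255, 0], [50, 54]]
t2 = [[0, 200], [50, 52]]
delta = three_frame_delta(t0, t1, t2)  # [[255, 0], [0, 0]]
```

For scale: if the webcam delivers 640×480 frames (307,200 pixels), the `threshold` of 170000 in `detect_motion` only fires when more than about 55% of the pixels come out non-zero.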

The XML files required for face and eye detection can be found on GitHub: haarcascade_frontalface_default.xml and haarcascade_eye.xml.

The Webcam class now has two public functions, detect_motion and detect_faces. detect_motion was discussed in my previous post, and is all to do with waiting until something moves in front of the webcam and triggers a threshold. detect_faces is the new function, which takes a snap from the webcam and determines whether the motion that triggered the threshold was a human or, say, a vehicle. If it’s got a face and eyes then it’s a human, was my logic.
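As written, `detect_faces` returns True as soon as any face rectangle is found; the eye detections are only drawn on the image. If the rule really is "a face and eyes", a hedged sketch of that check follows (Python 3). `is_probably_human` and its `min_eyes` parameter are hypothetical; the cascades are passed in as any objects with a `detectMultiScale` method, such as the `cv2.CascadeClassifier` instances the class already creates, so the function itself does not import OpenCV.

```python
def is_probably_human(gray, face_cascade, eye_cascade, min_eyes=1):
    """Return True if at least one detected face contains at least `min_eyes` eyes.

    `gray` is a greyscale image that supports 2-D slicing (e.g. the NumPy array
    returned by cv2.cvtColor); the cascades only need a detectMultiScale method.
    """
    # same parameters as detect_faces: scaleFactor=1.3, minNeighbors=5
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)
    for (x, y, w, h) in faces:
        # look for eyes only inside this face's bounding box
        roi_gray = gray[y:y + h, x:x + w]
        eyes = eye_cascade.detectMultiScale(roi_gray)
        if len(eyes) >= min_eyes:
            return True
    return False
```

Inside `detect_faces`, something like `return is_probably_human(gray, face_cascade, eye_cascade, min_eyes=2)` could stand in for the final `len(faces)` check. Requiring two eyes is stricter but more likely to reject real visitors whose second eye the cascade misses, so the value would need tuning.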

The rest of the program flows as in said previous post, using Google’s text to speech and speech to text services to converse with the visitor. Lovely.

from webcam import Webcam
from speech import Speech

webcam = Webcam()
speech = Speech()

# wait until motion detected at front door
webcam.detect_motion()

# if faces at front door
if webcam.detect_faces():

    # ask visitor for identification
    speech.text_to_speech("State your name punk")

    # capture the visitor's reply
    visitor_name = speech.speech_to_text('/home/pi/PiAUISuite/VoiceCommand/speech-recog.sh')

    # ask visitor if postman
    speech.text_to_speech("Are you the postman " + visitor_name)

    # capture the visitor's reply
    is_postman = speech.speech_to_text('/home/pi/PiAUISuite/VoiceCommand/speech-recog.sh')

    # if postman, provide instruction
    if is_postman == "yes":
        speech.text_to_speech("Please leave the parcel at the back gate and leave")
    else:
        speech.text_to_speech("Fuck off")
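One fragile spot in the program above is the exact comparison `is_postman == "yes"`: if the transcription came back as, say, "Yes." or "yeah", the visitor would get the rude branch. A hedged sketch (Python 3) wraps the conversation in a function that takes the speak and listen steps as parameters, which also lets it be exercised without a microphone. `is_yes` and `greet_visitor` are hypothetical names, not part of the `Speech` class, and the accepted words are an assumption.

```python
def is_yes(reply):
    """Loosely interpret a transcribed reply as 'yes' (hypothetical helper)."""
    if not reply:
        return False
    cleaned = reply.strip().lower()
    for mark in ".,!?":
        cleaned = cleaned.replace(mark, " ")
    words = cleaned.split()
    return bool(words) and words[0] in {"yes", "yeah", "yep"}


def greet_visitor(speak, listen):
    """Run the doorstep conversation; returns True if the visitor says they are the postman.

    `speak(text)` says something aloud; `listen()` returns the transcribed reply.
    """
    speak("State your name punk")
    visitor_name = listen() or ""  # guard against an empty transcription
    speak("Are you the postman " + visitor_name)
    if is_yes(listen()):
        speak("Please leave the parcel at the back gate and leave")
        return True
    speak("Fuck off")
    return False
```

On the Pi this might be called as `greet_visitor(speech.text_to_speech, lambda: speech.speech_to_text('/home/pi/PiAUISuite/VoiceCommand/speech-recog.sh'))`.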

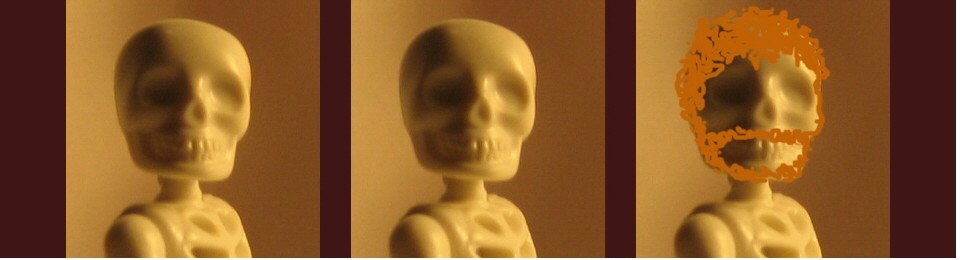

Before installing the system to monitor my Belgian friend’s front door, I thought it best to take it for a test run. Click the image to see me playing the part of the postman and having my face clocked.

It worked a charm, so I took the gear round to Arkwood’s and set it up to wait for his first visitor. Rubbing my hands with glee, I trotted home and waited for my buddy to telephone with the good news.

The telephone rang a few hours later. Arkwood was in a rage.

‘A fuckin’ squirrel set the motion detection off! What a crock of crap.’

Hm. I hadn’t accounted for a furry rodent having a face and a set of eyes. Damn. Still, it gives me a good excuse to have a go at some facial recognition. Maybe I’ll even get a match on the postman without having to ask him for identification.

Ciao!

Hi,

I have pasted the program into a file named faceeyedetect.py and downloaded both .xml files. How do I run the program? When I run python faceeyedetect.py I don’t get any output.

Awaiting your reply.

Regards,

Praveen Kumar

Hi Praveen,

I used IDLE on the Raspberry Pi to run the program. Paste the Webcam class into a file called webcam.py. Paste the main program (the second code sample) into a file in the same directory (you can call this file anything you want). Put the .xml files in the same directory. Create the subdirectories the code saves webcam images to (WebCam/Detection, WebCam/Motion and WebCam/Photograph). Open the main program in IDLE and run it.
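`cv2.imwrite` will not create missing folders, so the snaps are not saved unless the subdirectories exist. A small hedged helper (Python 3; `make_image_dirs` and `IMAGE_DIRS` are hypothetical names) creates them in one go, using the folder names from the `_save_image` calls in the Webcam class:

```python
import os

# Folder names taken from the _save_image calls in the Webcam class.
IMAGE_DIRS = ("WebCam/Detection", "WebCam/Motion", "WebCam/Photograph")


def make_image_dirs(base="."):
    """Create the image folders under `base`; no error if they already exist."""
    created = []
    for rel in IMAGE_DIRS:
        path = os.path.join(base, *rel.split("/"))
        os.makedirs(path, exist_ok=True)
        created.append(path)
    return created
```

Calling `make_image_dirs()` once from the directory that holds webcam.py and the main program sets up the paths the code writes to, since those paths are relative to the working directory.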

There’s some code in the main program to do with Google’s Text To Speech / Speech To Text services – you can look at the previous post about this, or just comment it out if you’re only interested in the webcam stuff.

You’ll also need the OpenCV cv2 Python package installed.

Let me know if you’re still getting errors, cheers


How fast is face detection? I tried a simple script of my own and it takes 6 seconds!!!

import cv2
import time

start = time.time()

classifier = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
frame = cv2.imread('file.jpg')
gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

detected_faces = classifier.detectMultiScale(
    gray,
    scaleFactor=1.3,
    minNeighbors=5
)

print(time.time() - start)
print("Detected " + str(len(detected_faces)) + " faces")

# Draw a rectangle around the faces
for (x, y, w, h) in detected_faces:
    cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)

cv2.imwrite('detected.jpg', frame)

start = time.time()

Hi. So this got me going a bit nuts on the testing 🙂

Summary:

Note: haarcascade_roundabout.xml and roundabout1.png are available at my cascade classifier repository https://onedrive.live.com/redir?resid=74B6CEA107C215CA%21107

Note: I ran your script without the loop to draw the rectangles (and just face detection, not eyes).

| Device | Source | Image size | Cascade | Time to run script |
|---|---|---|---|---|
| Raspberry Pi | Webcam | 101KB (640w 480h 72ppi) | haarcascade_frontalface_default.xml (940KB) | 8.6sec |
| Raspberry Pi | Webcam | 101KB (640w 480h 72ppi) | haarcascade_roundabout.xml (69KB) | 3.2sec |
| Windows 7 PC | roundabout1.png | 3.02MB (1679w 926h 72ppi) | haarcascade_frontalface_default.xml (940KB) | 0.7sec |
| Raspberry Pi | roundabout1.png | 3.02MB (1679w 926h 72ppi) | haarcascade_frontalface_default.xml (940KB) | 39.6sec |
| Windows 7 PC | roundabout1.png | 3.02MB (1679w 926h 72ppi) | haarcascade_roundabout.xml (69KB) | 0.3sec |
| Raspberry Pi | roundabout1.png | 3.02MB (1679w 926h 72ppi) | haarcascade_roundabout.xml (69KB) | 15.4sec |
| Windows 7 PC | face.jpg | 3.51MB (4608w 3456h 300ppi) | haarcascade_frontalface_default.xml (940KB) | 6.6sec |
| Raspberry Pi | face.jpg | 3.51MB (4608w 3456h 300ppi) | haarcascade_frontalface_default.xml (940KB) | 419.2sec |
| Windows 7 PC | face.jpg | 3.51MB (4608w 3456h 300ppi) | haarcascade_roundabout.xml (69KB) | 3.7sec |
| Raspberry Pi | face.jpg | 3.51MB (4608w 3456h 300ppi) | haarcascade_roundabout.xml (69KB) | 204.8sec |
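The figures above suggest run time grows with image size, so one hedged option is to shrink large images before detection and scale the rectangles back up afterwards. This is a sketch, not the commenter's method: `downscale_factor`, `rescale_boxes` and `detect_faces_downscaled` are hypothetical names, the 640-pixel target width is an arbitrary assumption, and faces that end up smaller than the cascade's minimum window after shrinking will be missed. OpenCV is only imported inside the detection function, so the two pure helpers work on their own (Python 3).

```python
def downscale_factor(width, max_width=640):
    """Factor (at most 1.0) that shrinks an image `width` pixels wide to `max_width`."""
    return min(1.0, max_width / float(width))


def rescale_boxes(boxes, factor):
    """Map (x, y, w, h) boxes found on the shrunken image back to original coordinates."""
    return [tuple(int(round(v / factor)) for v in box) for box in boxes]


def detect_faces_downscaled(classifier, gray, max_width=640):
    """Run classifier.detectMultiScale on a shrunken copy of the greyscale image `gray`."""
    import cv2  # imported here so the helpers above can be used without OpenCV

    width = gray.shape[1]
    factor = downscale_factor(width, max_width)
    if factor < 1.0:
        # INTER_AREA is OpenCV's usual interpolation choice for shrinking
        small = cv2.resize(gray, None, fx=factor, fy=factor, interpolation=cv2.INTER_AREA)
    else:
        small = gray
    boxes = classifier.detectMultiScale(small, scaleFactor=1.3, minNeighbors=5)
    return rescale_boxes(boxes, factor)
```

In the benchmark script, `detected_faces = detect_faces_downscaled(classifier, gray)` could replace the direct `detectMultiScale` call, and the rectangles are still drawn on the full-size `frame`. Any speed-up would need to be measured on the Pi.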

Hi, firstly thank you very much for this amazing tutorial 🙂

I put the xml files and code files (.py files) in place, and when I run the Arkwood’s Surveillance System .py file with IDLE I don’t get any error, but it is not working: nothing happens and the webcam does not start. Do you have any suggestion or solution for this problem?

Hi Oguzhan,

You could debug each line to find what’s failing, e.g. uncomment “print (count)” to see whether the motion count exceeds the threshold. Also, if you are only interested in face detection, just comment out the motion detection and speech functionality. To make sure your webcam works, try:

import cv2

ok, img = cv2.VideoCapture(0).read()
if not ok:
    print('Could not read a frame from the webcam')
else:
    cv2.imshow('Test', img)
    cv2.waitKey(10000)

Your tutorials are amazing and quite detailed. I’ve just started looking into OpenCV.

I’m currently working on detecting microsleep and drowsiness using OpenCV on a Raspberry Pi. Your tutorial really helped me get started. Any feedback on how to go about detecting whether the eye remains closed for longer than a fixed threshold time?

Hi there,

Great idea! Eye detection (run within each detected face) will provide the region for each eye in the image, so I would suggest starting with something simple like a colour threshold, i.e. if there is a certain number of white pixels then the eye must be open, otherwise closed (greyscale analysis might also work). Just put the whole thing in a loop and keep track of time: if the eye is closed for more than a few seconds, play an audio alarm to wake the dozy dude up.
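The suggestion above can be sketched as a white-pixel test plus a small timer, in pure Python 3 so it can be tried without a camera. Everything here is an assumption for illustration: the names `white_fraction` and `EyeClosureAlarm`, the brightness cut-off of 200, and the two-second limit. A real greyscale eye region from OpenCV (a NumPy array) can be passed in as `roi`, since it iterates row by row like a list of lists.

```python
import time


def white_fraction(roi, level=200):
    """Fraction of pixels in a 2-D greyscale region at or above `level` (hypothetical cut-off)."""
    total = 0
    bright = 0
    for row in roi:
        for px in row:
            total += 1
            if px >= level:
                bright += 1
    return float(bright) / total if total else 0.0


class EyeClosureAlarm:
    """Tracks how long the eye has looked closed; reports True once `limit_seconds` is reached."""

    def __init__(self, limit_seconds=2.0):
        self.limit = limit_seconds
        self.closed_since = None  # time the current closed spell started, if any

    def update(self, eye_open, now=None):
        now = time.monotonic() if now is None else now
        if eye_open:
            # eye open again: reset the closed-spell timer
            self.closed_since = None
            return False
        if self.closed_since is None:
            self.closed_since = now
        return now - self.closed_since >= self.limit
```

In the capture loop you might compute `eye_open = white_fraction(eye_roi) > 0.05` (a hypothetical threshold to tune against real footage), call `alarm.update(eye_open)` once per frame, and play the alarm whenever it returns True.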

Sir, I am trying the code but it gives me the error “ImportError: No module named cv2”.

My Pi 3 installation is fresh; only OpenCV 3.1.0 is installed, as directed by the PyImageSearch.com install guide.

Hi. It’s been a while since I’ve been on a Pi, sorry; check out the comments here

Thanks for the reply, sir. I will try it out.

Regards